Caption Images by a Pretrained Image-Captioning Model

Introduction to the image-captioning model

The book Deep Learning with PyTorch (Section 2.3, “A pretrained network that describes scenes”) introduces an image-captioning model [1] that generates an English caption describing an input image:

… The model is trained on a large dataset of images along with a paired sentence description: for example, “A Tabby cat is leaning on a wooden table, with one paw on a laser mouse and the other on a black laptop.”

This captioning model has two connected halves. The first half of the model is a network that learns to generate “descriptive” numerical representations of the scene (Tabby cat, laser mouse, paw), which are then taken as input to the second half. That second half is a recurrent neural network (RNN) that generates a coherent sentence by putting those numerical descriptions together. The two halves of the model are trained together on image-caption pairs.

The second half of the model is called recurrent because it generates its outputs (individual words) in subsequent forward passes, where the input to each forward pass includes the outputs of the previous forward pass. This generates a dependency of the next word on words that were generated earlier, as we would expect when dealing with sentences or, in general, with sequences.

In short, this image-captioning model works like a single end-to-end network (an image encoder followed by an RNN) trained on pairs of images (features) and captions (labels), i.e. through supervised training.
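The recurrence described above can be illustrated with a toy greedy decoder in plain Python (no PyTorch). This is only a sketch under made-up assumptions: the hypothetical `step` function stands in for one forward pass of the RNN half, and its next-word scores come from a hand-written lookup table rather than learned weights computed from image features. Each chosen word is fed back as the input of the next pass, which is what makes later words depend on earlier ones.

```python
from typing import Dict, List, Tuple

# Hypothetical next-word scores keyed by the previous word (made up for illustration).
# A real decoder would compute these from the image features and its hidden state.
TOY_SCORES: Dict[str, Dict[str, float]] = {
    "<start>": {"a": 0.9, "zebra": 0.1},
    "a": {"zebra": 0.8, "field": 0.2},
    "zebra": {"standing": 0.7, "<end>": 0.3},
    "standing": {"in": 0.9, "<end>": 0.1},
    "in": {"the": 0.6, "a": 0.4},
    "the": {"field": 1.0},
    "field": {"<end>": 1.0},
}


def step(features: List[float], prev_word: str, state: int) -> Tuple[Dict[str, float], int]:
    """One toy 'forward pass': next-word scores plus an updated state.

    The state only counts generated words, and this toy ignores the features.
    """
    return TOY_SCORES[prev_word], state + 1


def greedy_caption(features: List[float], max_len: int = 16) -> str:
    """Generate words one pass at a time, feeding each output back as the next input."""
    words: List[str] = []
    prev, state = "<start>", 0
    for _ in range(max_len):
        scores, state = step(features, prev, state)
        prev = max(scores, key=scores.get)  # greedy: take the highest-scoring word
        if prev == "<end>":
            break
        words.append(prev)
    return " ".join(words)


print(greedy_caption([0.0]))
```

Greedy decoding (always taking the top-scoring word) is the simplest strategy; beam search instead keeps several candidate sentences alive at each step.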

The model above, named ImageCaptioning.pytorch, can be obtained from its GitHub repository [2]. That repository is a clone of Ruotian Luo’s repository [3], and, as the book says, Luo’s model is an implementation of Andrej Karpathy’s NeuralTalk2 [4].

Caption images (model inference)

Caption one image

To caption images, that is, to run inference with the pretrained ImageCaptioning.pytorch model (as done with ResNet-101 [5] and CycleGAN [6], which are also introduced in the book), we first download the repository [2]. After downloading, we can take a glance at the repository structure:

tree /F

G:.
│  dataloader.py
│  dataloaderraw.py
│  eval.py
│  eval_utils.py
│  opts.py
│  README.md
│  train.py
│
├─data
│  ├─FC
│  │      fc-infos.pkl
│  │      fc-model.pth
│  │
│  └─imagenet_weights
│          resnet101a.pth
│          resnet101b.pth
│
├─misc
│      resnet.py
│      resnet_utils.py
│      utils.py
│      __init__.py
│
├─models
│      Att2inModel.py
│      AttModel.py
│      CaptionModel.py
│      FCModel.py
│      OldModel.py
│      ShowTellModel.py
│      __init__.py
│
├─scripts
│      convert_old.py
│      prepro_feats.py
│      prepro_labels.py
│
└─vis
    │  index.html
    │  jquery-1.8.3.min.js
    │
    └─imgs
            dummy

As can be seen, there is a data folder in the root folder, so we can create a new subfolder, images, inside it and put the images to be captioned there (or put them directly under the data folder). For a first try, I put only one image, zebra.jpg [6], into images.
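This preparation can also be scripted with pathlib, which handles path separators on any OS. A minimal sketch, assuming it runs with the repository root as `repo_root`; `prepare_image_folder` is a hypothetical helper, and the extension filter is my own assumption, not necessarily the exact set of extensions DataLoaderRaw accepts.

```python
import shutil
from pathlib import Path
from typing import Iterable, List

# Assumed image extensions; the repository's loader may accept a different set.
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def prepare_image_folder(repo_root: Path, images: Iterable[Path]) -> List[Path]:
    """Create <repo_root>/data/images, copy the given images into it,
    and return the sorted image files the folder now contains."""
    image_dir = repo_root / "data" / "images"
    image_dir.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    for img in images:
        shutil.copy2(img, image_dir / img.name)  # keep the original file name
    return sorted(p for p in image_dir.iterdir() if p.suffix.lower() in IMAGE_EXTS)
```

For example, `prepare_image_folder(Path("."), [Path("zebra.jpg")])` run from the repository root would copy zebra.jpg into data/images and list it.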

After that, execute the command:


python eval.py --model .\data\FC\fc-model.pth --infos_path .\data\FC\fc-infos.pkl --image_folder .\data\images\ --dump_images 0 --batch_size 1

By the way, to make it run as expected, I had to install two packages, h5py and scikit-image. When installing scikit-image, the error ERROR: THESE PACKAGES DO NOT MATCH THE HASHES FROM THE REQUIREMENTS FILE. occurred because of an unstable Internet connection [7].
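Before running eval.py, it can save a round trip to check which of these packages are missing. A small sketch using importlib.util.find_spec from the standard library; `missing_modules` is a hypothetical helper. Note that scikit-image is imported as skimage, while h5py keeps its name; anything reported missing can then be installed with pip install h5py scikit-image.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# scikit-image is imported as "skimage"; h5py is imported as "h5py".
print(missing_modules(["h5py", "skimage"]))
```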

Running the command, we get:

DataLoaderRaw loading images from folder: .\data\images\
0
listing all images in directory .\data\images\
DataLoaderRaw found 1 images
G:\...\ImageCaptioning.pytorch-master\models\FCModel.py:147: UserWarning: Implicit dimension choice for log_softmax has been deprecated. Change the call to include dim=X as an argument.
  logprobs = F.log_softmax(self.logit(output))
G:\...\ImageCaptioning.pytorch-master\models\FCModel.py:123: UserWarning: Implicit dimension choice for log_softmax has been deprecated. Change the call to include dim=X as an argument.
  logprobs = F.log_softmax(self.logit(output))
image 1: a group of zebras standing in a field
evaluating validation preformance... -1/1 (0.000000)
loss: 0.0

where the 9th line shows that the predicted caption for the image is “a group of zebras standing in a field”.
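The two UserWarnings above do not affect the caption; they come from calling F.log_softmax without dim at lines 123 and 147 of models\FCModel.py (according to the warnings). As far as I can tell, the scores passed there are 2-D, shaped (batch, vocabulary), and for 2-D input PyTorch’s implicit choice is dim=1, so changing both calls to logprobs = F.log_softmax(self.logit(output), dim=1) should silence the warnings without changing results (not something I tested). The standard-library sketch below, with a hypothetical helper name, shows what normalizing along dim=1 means: each row, i.e. one image’s scores over the vocabulary, becomes a log-probability distribution.

```python
import math
from typing import List


def log_softmax_rows(scores: List[List[float]]) -> List[List[float]]:
    """Log-softmax over each row, i.e. the dim=1 case for a (batch, vocab) matrix."""
    result = []
    for row in scores:
        m = max(row)  # subtract the row maximum for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        result.append([x - log_sum for x in row])
    return result


logprobs = log_softmax_rows([[2.0, 1.0, 0.1], [0.0, 0.0, 0.0]])
# Exponentiating each row gives probabilities that sum to 1.
print([sum(math.exp(v) for v in row) for row in logprobs])
```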

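The result is only so-so: as the book points out (see the quotation at the end of this post), the image shows a single zebra with a rider, not a group of zebras. Before discussing model performance further, let’s go through a few options of the inference command.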

Option batch_size

First, the option batch_size specifies the batch size used for inference. Its default value is 10, and if fewer than 10 images remain, the script automatically fills the batch by repeating images from the beginning, in order. For example, if we don’t specify this option (that is, we adopt the default value):


python eval.py --model .\data\FC\fc-model.pth --infos_path .\data\FC\fc-infos.pkl --image_folder .\data\images\ --dump_images 0

then we get the following results:

... ...
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
image 1: a group of zebras standing in a field
evaluating validation preformance... -1/1 (0.000000)
loss: 0.0
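The ten identical lines follow from the wrap-around behavior described above. Here is a simplified model of it, my own sketch rather than the repository’s DataLoaderRaw code: `next_batch` is hypothetical and only mimics picking indices and wrapping back to the first image.

```python
from typing import List, Tuple


def next_batch(num_images: int, batch_size: int, start: int = 0) -> Tuple[List[int], int, bool]:
    """Pick batch_size image indices starting at `start`, wrapping to 0 at the end.

    Returns the indices, the position for the next batch, and whether it wrapped.
    """
    indices: List[int] = []
    pos, wrapped = start, False
    for _ in range(batch_size):
        indices.append(pos)
        pos += 1
        if pos >= num_images:  # ran past the last image: start over from the first
            pos, wrapped = 0, True
    return indices, pos, wrapped


# One image with the default batch size of 10: the same image fills every slot.
print(next_batch(1, 10))
```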

The batch size affects inference speed: if it is too small, the images are processed in many small forward passes and the total inference time becomes long. However, if only a few images are to be captioned, it is better to set the batch size no larger than the number of images, as we did with --batch_size 1 above, so that no repeated images are captioned.
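That rule of thumb can be written as a tiny helper. This is a hypothetical sketch, not part of the repository: it caps the batch size at the number of images and counts how many batches (forward passes) are needed to cover them all.

```python
import math
from typing import Tuple


def plan_batches(num_images: int, requested_batch_size: int = 10) -> Tuple[int, int]:
    """Return (batch_size, number_of_batches) for captioning num_images images.

    The batch size is capped at num_images; when num_images is not a multiple
    of the batch size, the last batch is still only partly filled.
    """
    if num_images <= 0:
        raise ValueError("need at least one image")
    batch_size = min(requested_batch_size, num_images)
    return batch_size, math.ceil(num_images / batch_size)


print(plan_batches(8))   # eight images, as in the multi-image run below
print(plan_batches(25))  # more images than the default batch size
```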

Option dump_images

In the command, the option dump_images determines “whether dump images into vis/imgs folder for vis”: value 1 (the default) means “yes”, whereas 0 means “no”. However, on Windows, errors occur if we choose “yes” (i.e. omit --dump_images 0):


python eval.py --model .\data\FC\fc-model.pth --infos_path .\data\FC\fc-infos.pkl --image_folder .\data\images\ --batch_size 1

... ...
cp ".\data\images\zebra.jpg" vis/imgs/img1.jpg
'cp' is not recognized as an internal or external command,
operable program or batch file.
image 1: a group of zebras standing in a field
evaluating validation preformance... -1/1 (0.000000)
loss: 0.0

where the message printed after cp ".\data\images\zebra.jpg" vis/imgs/img1.jpg states that cp is not a valid command, although whether images are dumped or not does not influence the inference itself. The error occurs because cp is not available in the Windows command prompt, where copy should be used instead [8].

Another potential source of errors is the path delimiter /, which we should replace with \ on Windows.

To avoid both errors, we can modify the following code snippet in .\eval_utils.py:

# ...
if eval_kwargs.get('dump_images', 0) == 1:
    # dump the raw image to vis/ folder
    cmd = 'cp "' + os.path.join(eval_kwargs['image_root'], data['infos'][k]['file_path']) + '" vis/imgs/img' + str(len(predictions)) + '.jpg' # bit gross
    print(cmd)
    os.system(cmd)
# ...

to:

# ...
if eval_kwargs.get('dump_images', 0) == 1:
    # dump the raw image to vis/ folder
    # "copy" replaces the Unix-only "cp"; backslashes are doubled so Python
    # does not warn about the invalid escape sequence "\i"
    cmd = 'copy "' + os.path.join(eval_kwargs['image_root'], data['infos'][k]['file_path']) + '" vis\\imgs\\img' + str(len(predictions)) + '.jpg' # bit gross
    print(cmd)
    os.system(cmd)
# ...

Then, rerunning the command, we get:

... ...
copy ".\data\images\zebra.jpg" vis\imgs\img1.jpg
        1 file(s) copied.
image 1: a group of zebras standing in a field
evaluating validation preformance... -1/1 (0.000000)
loss: 0.

with no more errors.

However, I am not sure why or when images need to be dumped, so in the end I decided to deactivate this option with --dump_images 0, as in the first command.
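A more portable alternative, which I have not tried inside the repository, is to skip the shell entirely and copy the file with Python’s shutil, letting os.path.join choose the separator. A minimal sketch: `dump_image` is a hypothetical helper; in eval_utils.py its arguments would correspond to os.path.join(eval_kwargs['image_root'], data['infos'][k]['file_path']) and len(predictions).

```python
import os
import shutil


def dump_image(src_path: str, index: int, vis_dir: str = os.path.join("vis", "imgs")) -> str:
    """Copy src_path to <vis_dir>/img<index>.jpg without calling cp or copy."""
    os.makedirs(vis_dir, exist_ok=True)  # create vis/imgs if it is missing
    dst = os.path.join(vis_dir, "img" + str(index) + ".jpg")
    shutil.copyfile(src_path, dst)  # works the same on Windows and Unix
    return dst
```

Because no shell command is built, neither the cp/copy difference nor the / versus \ delimiter matters.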

Change caption-printing format

As shown before, predicted captions are printed in the form image xx: xxxxxx. As the number of images grows, it becomes difficult to tell which image is image 1, image 2, image 3, and so on. So, still in .\eval_utils.py, we can change:

# ...
if verbose:
    print('image %s: %s' %(entry['image_id'], entry['caption']))
# ...

to:

# ...
if verbose:
    print('\"%s\": %s' %(data['infos'][k]['file_path'], entry['caption']))
# ...

Then, rerunning the command, we get:

... ...
".\data\images\zebra.jpg": a group of zebras standing in a field
evaluating validation preformance... -1/1 (0.000000)
loss: 0.0

in the console, which is more straightforward.
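The modified print call can also be written with an f-string. The sketch below uses a hypothetical helper that produces exactly the same line; inside eval_utils.py it would be called as print(caption_line(data['infos'][k]['file_path'], entry['caption'])).

```python
def caption_line(file_path: str, caption: str) -> str:
    # Same text as the %-formatted print above: the quoted path, a colon, the caption.
    return f'"{file_path}": {caption}'


print(caption_line(".\\data\\images\\zebra.jpg", "a group of zebras standing in a field"))
```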

Captioning multiple images

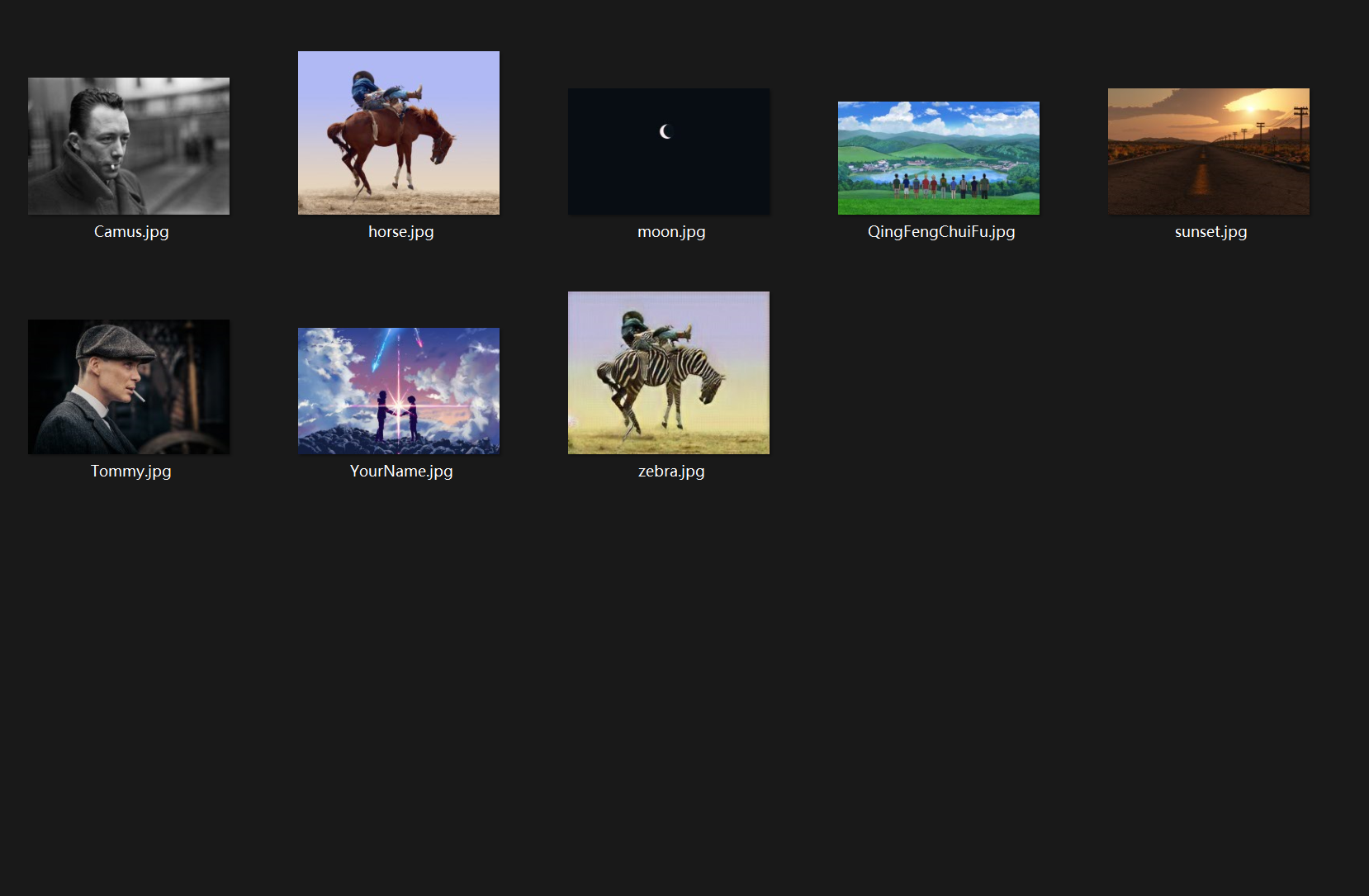

After above discussions, we could go further, captioning multiple images. I put 7 extra images, with different resolutions, in .\data\images folder:

and run following command to get results:


python eval.py --model .\data\FC\fc-model.pth --infos_path .\data\FC\fc-infos.pkl --image_folder .\data\images\ --dump_images 0 --batch_size 8

".\data\images\Camus.jpg": a man with a beard and a tie
".\data\images\horse.jpg": a person riding a horse on a beach
".\data\images\moon.jpg": a close up of a white and white plate
".\data\images\QingFengChuiFu.jpg": a couple of people standing on top of a lush green field
".\data\images\sunset.jpg": a flock of birds standing on top of a sandy beach
".\data\images\Tommy.jpg": a blurry photo of a blurry image of a person
".\data\images\YourName.jpg": a person is flying a kite in the sky
".\data\images\zebra.jpg": a group of zebras standing in a field
evaluating validation preformance... -1/8 (0.000000)
loss: 0.0

By the way, if an image has four channels (RGBA), an error occurs; in that case, we should first convert it to a three-channel (RGB) image [9].
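The post cited above converts the image file itself with PIL. Alternatively, the array could be fixed right after loading. A sketch, assuming the image has already been read into a NumPy H×W×C array (as scikit-image’s imread returns); where exactly to call it in dataloaderraw.py is my assumption and untested, and `to_rgb` is a hypothetical helper.

```python
import numpy as np


def to_rgb(img: np.ndarray) -> np.ndarray:
    """Return an H x W x 3 array from a grayscale (H x W), RGB, or RGBA image.

    The alpha channel is simply dropped, so transparent pixels keep whatever
    RGB values they store; honoring transparency would need compositing instead.
    """
    if img.ndim == 2:  # grayscale: repeat the single channel three times
        return np.stack([img, img, img], axis=2)
    if img.ndim == 3 and img.shape[2] == 4:  # RGBA: keep only R, G, B
        return img[:, :, :3]
    if img.ndim == 3 and img.shape[2] == 3:  # already RGB
        return img
    raise ValueError(f"unsupported image shape {img.shape}")
```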

In closing

Look at those images and their corresponding captions. To tell the truth, the captioning ability of the model is quite limited. The book attributes this to “insufficient” training samples: the model never saw images with certain content during training. On the other hand, the book also makes the case that such deep learning technology, which relies on few hand-written rules, is fascinating and promising (and surely it is):

… it [caption ".\data\images\zebra.jpg": a group of zebras standing in a field for image zebra.jpg] got the animal right, but it saw more than one zebra in the image. Certainly this is not a pose that the network has ever seen a zebra in, nor has it ever seen a rider on a zebra (with some spurious zebra patterns). In addition, it is very likely that zebras are depicted in groups in the training dataset, so there might be some bias that we could investigate. The captioning network hasn’t described the rider, either. Again, it’s probably for the same reason: the network wasn’t shown a rider on a zebra in the training dataset. In any case, this is an impressive feat: we generated a fake image with an impossible situation, and the captioning network was flexible enough to get the subject right.

We’d like to stress that something like this, which would have been extremely hard to achieve before the advent of deep learning, can be obtained with under a thousand lines of code, with a general-purpose architecture that knows nothing about horses or zebras, and a corpus of images and their descriptions (the MS COCO dataset, in this case). No hardcoded criterion or grammar—everything, including the sentence, emerges from patterns in the data.

…

At the time of this writing, models such as these exist more as applied research or novelty projects, rather than something that has a well-defined, concrete use. The results, while promising, just aren’t good enough to use … yet. With time (and additional training data), we should expect this class of models to be able to describe the world to people with vision impairment, transcribe scenes from video, and perform other similar tasks.

The three GitHub repositories mentioned above were created between 2015 and 2018 (see references [2], [3], and [4], where the creation times were obtained through the GitHub REST API [10][11]), and the book was published in 2020 [1]. During that period, as the book says, image-captioning models and similar models had not yet become mature products. In recent years, however, LLMs such as ChatGPT, which are more functional and user-friendly, have been booming, with deeper network structures and more training data. This kind of “small” model may no longer be appealing, but to my mind, some of the fundamentals and flaws are basically the same. Anyway, it is a good starting point for me to learn about those LLMs.
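A minimal standard-library sketch of such a query, not the code from those posts: the endpoint https://api.github.com/repos/{owner}/{repo} returns JSON whose created_at, updated_at, and pushed_at fields are ISO 8601 timestamps in UTC. The helper names are hypothetical, and fetching requires network access.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Dict

API = "https://api.github.com/repos/{owner}/{repo}"


def fetch_repo_json(owner: str, repo: str) -> str:
    """Download repository metadata from the GitHub REST API (needs network access)."""
    req = urllib.request.Request(
        API.format(owner=owner, repo=repo),
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")


def repo_timestamps(repo_json: str) -> Dict[str, datetime]:
    """Parse the three timestamps (ISO 8601 with a trailing 'Z' for UTC)."""
    data = json.loads(repo_json)
    return {
        key: datetime.strptime(data[key], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
        for key in ("created_at", "updated_at", "pushed_at")
    }
```

For example, repo_timestamps(fetch_repo_json("karpathy", "neuraltalk2"))["created_at"] would give the creation time of the NeuralTalk2 repository.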

References

[1] Deep Learning with PyTorch, Eli Stevens, Luca Antiga, and Thomas Viehmann, 2020, GitHub repository: deep-learning-with-pytorch, pp. 33-35.

[2] deep-learning-with-pytorch/ImageCaptioning.pytorch: image captioning codebase in pytorch(finetunable cnn in branch “with_finetune”;diverse beam search can be found in ‘dbs’ branch; self-critical training is under my self-critical.pytorch repository.), API: https://api.github.com/repos/deep-learning-with-pytorch/ImageCaptioning.pytorch (Created on 05 May 2018; last pushed on 03 July 2024).

[3] ruotianluo/ImageCaptioning.pytorch: I decide to sync up this repo and self-critical.pytorch. (The old master is in old master branch for archive), API: https://api.github.com/repos/ruotianluo/ImageCaptioning.pytorch (Created on 10 Feb. 2017; last pushed on 05 Oct. 2023).

[4] karpathy/neuraltalk2: Efficient Image Captioning code in Torch, runs on GPU, API: https://api.github.com/repos/karpathy/neuraltalk2 (Created on 20 Nov. 2015; last pushed on 07 Nov. 2017).

[5] Make A Deep Learning Model Inference based on the Pretrained ResNet-101 - WHAT A STARRY NIGHT~.

[6] A Simple Example to Know about CycleGAN - WHAT A STARRY NIGHT~.

[7] An Error Caused by Unstable Internet Connection when Installing Python Package: ERROR: THESE PACKAGES DO NOT MATCH THE HASHES FROM THE REQUIREMENTS FILE. - WHAT A STARRY NIGHT~.

[8] https://github.com/wangshub/wechat_jump_game/issues/20#issuecomment-768050579.

[9] Convert 4D-array Image (with RGBA Channels) to Normal 3D-array Image by PIL - WHAT A STARRY NIGHT~.

[10] Get GitHub Repository Information (in JSON Format) through REST API - WHAT A STARRY NIGHT~.

[11] Three GitHub Repo Timestamps Obtained through REST API: created_at, updated_at, and pushed_at (including a simple introduction to ISO 8601) - WHAT A STARRY NIGHT~.